A week ago the Renaissance expansion for Dominion was released. I opened up a poll asking people to rate each card-shaped object in the expansion from 0 to 10, based on how powerful they thought it was after initially seeing it and without having much time to play with it.

There were 47 responses, which is a great sample size. If you’d like a link to the raw data and the output of my script that computes the basic stats from that data, you can find that here.

A couple of quick notes before I get to presenting the data.

First, I did not submit my own ratings for this poll, and I asked the other playtesters I talked to to hold theirs back as well. The game designer has asked that we withhold our opinions on this for a couple of months.

I’ll be doing another poll around that time to see how opinions have changed after having some time to play with the cards, and I’ll be submitting my ratings to that poll. When I do the write-up for that poll I’ll provide my own commentary on where I agreed or disagreed with the community’s ratings, etc.

Second, I’ve been doing some thinking about the way this data should be presented, and I’m going to present the data differently from now on. Here’s why: these ratings are subjective by nature, largely because there is no “best” definition of what it means for a card to be strong/powerful or weak. If I ask you which of two cards is more powerful, what does that actually mean?

I’ve often said that minor differences in ranking or power level shouldn’t be taken that seriously, and I’ve made it clear that I don’t think this list functions well as a way to directly compare two cards, but then I just go ahead and present the data as a ranked list even though that’s not supposed to be the main takeaway. Why do I do that? That’s just silly. So I’m not going to do that anymore (and maybe if I get time to do it I’ll re-format the results from previous posts). I’ll present the data here as I think it’s most appropriate, and if you really want to see a ranking of the cards you can take the raw data and do it yourself. Just know that I don’t think there’s any value at all in doing that.

OK enough of that rant. Let’s see some data!

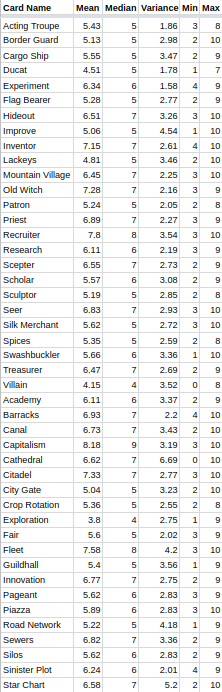

First, I’ll present the list of all ratings with relevant stats. It’s sorted not by mean this time, but we have the cards first (then the projects) and then they’re sorted in alphabetical order. Here it goes:

Keep in mind that if something has a high variance, the median score is often a better metric than the mean score for getting an accurate picture of the data, since a handful of extreme ratings can pull the mean much further than the median. Also, keep in mind that there is even less of a clear idea of what makes a Project powerful than there is of what makes a card powerful, so as expected we see slightly higher variance for those.
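To make the mean-versus-median point concrete, here is a minimal sketch in Python. The ratings below are made up for illustration and are not taken from the poll, and the use of population variance is an assumption, since the post doesn't say which formula the stats script uses.

```python
from statistics import mean, median, pvariance

# Hypothetical ratings for a single card (NOT from the poll data):
# most voters land around 7, but two very low scores are mixed in.
ratings = [7, 7, 8, 7, 6, 8, 7, 0, 1, 7]

card_mean = mean(ratings)
card_median = median(ratings)
# Population variance is an assumption; a script could just as well
# use the sample variance (statistics.variance).
card_variance = pvariance(ratings)

print(f"mean={card_mean:.2f}  median={card_median}  variance={card_variance:.2f}")
```

In this made-up example the two low scores drag the mean well below 7, while the median stays where most voters put the card.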

Hopefully you can take a look at this list and see for any given card what the approximate opinion is about that card.

I’d also like to point out that the mean scores range from 3.8 through 8.18, a spread of only 4.38 points on a 0 to 10 scale. I think this means first impressions of the Renaissance cards are fairly uncertain at this point: if a card is particularly strong or weak, many people haven’t figured that out just by looking at it (and for the record, I gave out scores as low as 3 and as high as 10 for my own personal first impressions, so I believe the power is there).

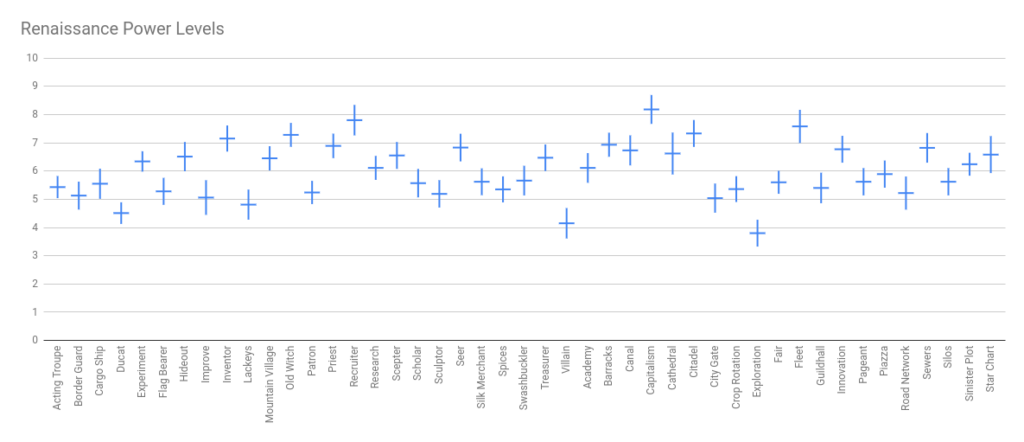

Here’s a second way to look at the data, in chart form:

The data here is the same, but the way it’s displayed is designed to give a better idea of how the variance plays into things. The horizontal tick for each card represents the mean score, and the vertical bar extending above and below it represents the 95% confidence interval for that mean. Statistically, this means we should be 95% confident that the “real” rating of the card (roughly, the mean we would get if the whole community had voted) lies within the range of that vertical bar.

So cards with less agreement have bigger vertical bars, and cards with more agreement have a smaller range.
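For anyone who wants to reproduce bars like these from the raw data, here is a rough sketch of one common way to compute a 95% confidence interval for a mean. It assumes a normal approximation (mean ± z × standard error); the post doesn't say which method was used for the chart, and both the function name `mean_confidence_interval` and the ratings are hypothetical.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev


def mean_confidence_interval(ratings, confidence=0.95):
    """Return (low, high) for the mean rating, using a normal approximation.

    This is an illustrative assumption, not necessarily the method
    behind the chart in this post.
    """
    n = len(ratings)
    center = mean(ratings)
    # Standard error of the mean, based on the sample standard deviation.
    standard_error = stdev(ratings) / sqrt(n)
    # Two-sided critical value; about 1.96 when confidence is 0.95.
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    margin = z * standard_error
    return center - margin, center + margin


# Hypothetical ratings (NOT from the poll): one card where voters
# mostly agree, and one where they are sharply split.
agreed = [6, 7, 7, 6, 7, 7, 6, 7]
split = [2, 10, 3, 9, 4, 10, 3, 9]

agreed_low, agreed_high = mean_confidence_interval(agreed)
split_low, split_high = mean_confidence_interval(split)

print(f"agreed: {agreed_low:.2f} to {agreed_high:.2f}")
print(f"split:  {split_low:.2f} to {split_high:.2f}")
```

The split card gets the wider interval, which is the "bigger vertical bar" effect described above. With small samples, a t-distribution critical value would give somewhat wider bars than this normal approximation.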

So here’s the data. I hope this is enlightening for you in some way. If there’s a big disagreement between your rating and the consensus, it should be an interesting discussion to hash out where that comes from. In a few months I’ll be making another poll and comparing those results to this post, so stay tuned!

Leaving the scores unsorted makes sense. I support that.

Even though I haven’t actually played with any of the cards yet, I’m pretty sure I would already rate them very differently, just based on hearing discussion about them. I was persuaded that Cargo Ship and Scepter are likely much better than I gave them credit for. Definitely not convinced about Canal though.